On Customer-Side Cloud Security

Cloud computing encapsulates IT resources and delivers them as services over the network. As a result, Cloud service customers (CSCs) can purchase and configure Cloud services on demand with minimal expense and management overhead. Despite these benefits, Cloud systems are susceptible to a variety of security threats, e.g., unauthorized access or denial-of-service (DoS) attacks. The security properties of Cloud services are therefore as important as their service quality attributes. To manage these security properties, security Service Level Agreements (secSLAs) [2] were proposed as contracts that explicitly specify the service security a Cloud service provider (CSP) is supposed to provide. In a secSLA, the security properties of the provided service are specified along with so-called service level objectives (SLOs). An SLO defines a target value for a security property that the CSP commits to achieve; in other words, SLOs quantify the security properties defined in secSLAs. To detect whether a provided service fails to meet the specified SLOs, the corresponding security properties need to be monitored at run time.
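To make the relation between a security property, its SLO target and run-time validation concrete, the following Python sketch represents an SLO as a numeric target plus the direction in which it must be met, and checks a measured value against it. The class and function names, the two-valued `Direction`, and the example property names are illustrative assumptions; actual secSLAs, such as those discussed in [2], may express SLOs in forms other than a single numeric threshold.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    """Whether the measured value must stay at/above or at/below the target."""
    AT_LEAST = "at_least"  # e.g. a coverage percentage
    AT_MOST = "at_most"    # e.g. a detection or response time


@dataclass(frozen=True)
class SLO:
    """A security property paired with the target value the CSP commits to."""
    name: str
    target: float
    direction: Direction


def is_violated(slo: SLO, measured: float) -> bool:
    """Return True if the measured value fails to meet the SLO target."""
    if slo.direction is Direction.AT_LEAST:
        return measured < slo.target
    return measured > slo.target


# Illustrative SLOs (hypothetical names and targets).
coverage = SLO("pct_hosts_without_severe_vulns", 95.0, Direction.AT_LEAST)
detection = SLO("mean_hours_to_incident_discovery", 24.0, Direction.AT_MOST)
print(is_violated(coverage, 92.5))   # True: below the committed 95 %
print(is_violated(detection, 6.0))   # False: well within 24 hours
```

A value exactly equal to the target counts as compliant in this sketch; a real secSLA would have to state that boundary explicitly.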

Such monitoring is commonly deployed on the provider side: CSPs measure and validate the SLOs, while CSCs typically receive only a report of the validation results. This practice undermines the utility of secSLAs as contracts, since the contracted properties are effectively validated by a single party, namely the provider that committed to them.

To alleviate this issue, Alboghdady et al. [1] recently proposed a security monitoring mechanism for client-side validation of SLOs. However, because CSPs give their customers only limited access to their technical infrastructure and internal data, fewer SLOs can be measured on the client side than can be assessed by CSPs. Based on this observation, we propose a monitoring framework that 1) gives CSCs access to CSP-internal data, 2) remains compatible with SLOs that do not require internal data, and 3) introduces only moderate overhead.

In its current form, our framework still assumes a trustworthy CSP, in the sense that the CSP does not tamper with the internal measurement results reported back to the CSC. Combining the proposed mechanisms with trusted computing techniques, such as remote attestation [3], could overcome this limitation.

Implementing the monitoring framework required a review of all SLOs not covered by the work of Alboghdady et al. For this purpose, we reviewed 97 SLOs from several catalogs developed in academic and industrial projects [4]–[8]. We found that a significant fraction of these SLOs cannot be measured by security mechanisms because their definitions are ambiguous or non-technical; e.g., the amount of a CSP's budget used for security cannot be measured automatically if the corresponding data is not suitably labeled. Of the 21 measurable SLOs we identified, 13 are supported in the prototype implementation of our monitoring framework. Since the internal data needed by all measurable SLOs is closely tied to properties of the Cloud infrastructure, we deployed and evaluated our framework on OpenStack [9], a popular Infrastructure-as-a-Service (IaaS) platform.

The SLOs considered for the CSC-side monitoring framework are drawn from the following specifications:

- NIST SP 800-55 [4] provides a set of security performance measures for information security programs and information systems.

- CIS [5] presents standard security control metrics that cover a wide range of business functions (e.g., incident management and vulnerability management).

- CUMULUS [6] specifies a security property vocabulary that describes the definitions and measurement methods of the security properties used in SLAs.

- A4Cloud [7] provides a collection of metrics to validate the compliance of non-functional requirements in terms of security, privacy, and organizational accountability.

- SPECS [8] presents a list of security metrics defined for the services developed in the SPECS project.

The complete SLO analysis and discussion are available at https://sites.google.com/view/sec-metrics/home.

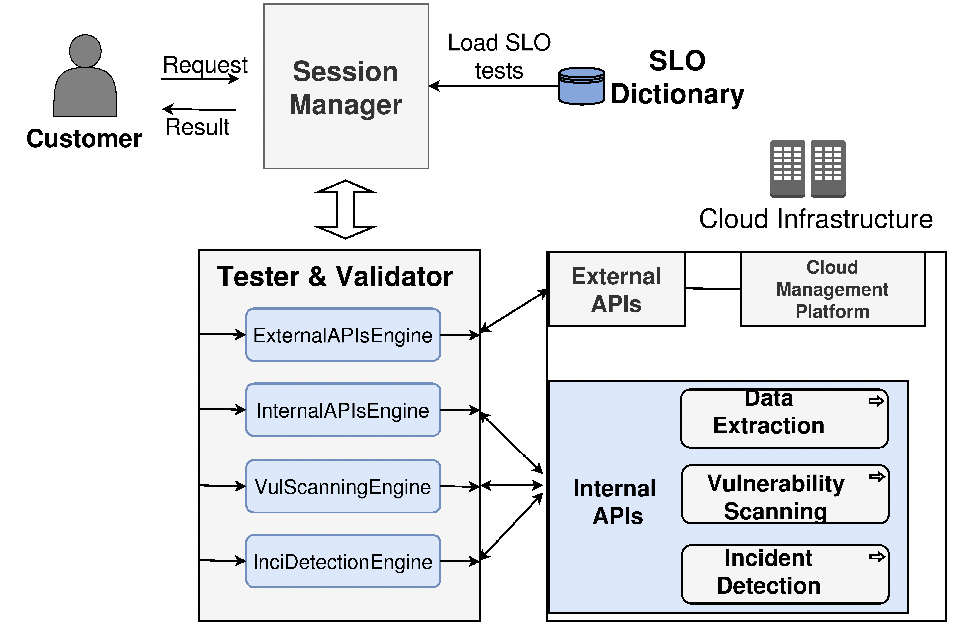

Our proposed framework for CSC-side secSLA validation continuously monitors SLO values throughout a service's life cycle and compares the measured values against the contracted ones. Figure 1 gives an overview of the monitoring framework. We first introduce its key components and then describe the workflow.

- Session Manager: The session manager is the core component of the monitoring framework. It coordinates the other components and handles tasks such as interacting with the CSC, initializing SLO measurements, and managing the Tester & Validator.

- SLO Dictionary: The SLO dictionary stores the measurement instances of the supported SLOs. Upon a monitoring request from the customer, the session manager loads the corresponding SLO measurement instances from this component.

- Tester & Validator: The tester and validator component initiates measurements based on the tests it receives from the session manager. For validation, it captures changes in SLO values in real time to detect security violations throughout the service life cycle. This component consists of four independent engines that monitor and validate the SLOs requested by the customer.

- Internal APIs: This component is integrated with the CSP's Cloud infrastructure, which constitutes the key novelty of the proposed monitoring approach. It contains three engines that collect the internal data required by SLOs, perform vulnerability scans on the nodes of the Cloud system, and automatically detect incidents that occur in the Cloud environment.
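The interplay between the SLO dictionary and the change-capturing behavior of the Tester & Validator can be sketched as follows. This is a hypothetical Python illustration, not the framework's implementation: a measurement instance is modeled as a zero-argument callable (standing in for, e.g., an Internal APIs engine), and "real time" change capture is approximated by polling that reports only SLOs whose value differs from the previous reading.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical type: a measurement instance returns the current value of one SLO.
Measurement = Callable[[], float]


class SLODictionary:
    """Registry mapping SLO names to their measurement instances (a sketch)."""

    def __init__(self) -> None:
        self._instances: Dict[str, Measurement] = {}

    def register(self, name: str, measure: Measurement) -> None:
        self._instances[name] = measure

    def load(self, names: List[str]) -> Dict[str, Measurement]:
        # Raises KeyError for SLOs that have no registered measurement instance.
        return {n: self._instances[n] for n in names}


class TesterValidator:
    """Re-runs the loaded measurements and reports only SLOs whose value changed."""

    def __init__(self, tests: Dict[str, Measurement]) -> None:
        self._tests = tests
        self._last: Dict[str, Optional[float]] = {n: None for n in tests}

    def poll(self) -> List[Tuple[str, float]]:
        changes = []
        for name, measure in self._tests.items():
            value = measure()
            if value != self._last[name]:  # first reading always counts as a change
                self._last[name] = value
                changes.append((name, value))
        return changes


# Usage with a stubbed measurement (hypothetical SLO name and readings).
readings = iter([3.0, 3.0, 5.0])
dictionary = SLODictionary()
dictionary.register("open_critical_vulns", lambda: next(readings))
tester = TesterValidator(dictionary.load(["open_critical_vulns"]))
print(tester.poll())  # [('open_critical_vulns', 3.0)]
print(tester.poll())  # []
print(tester.poll())  # [('open_critical_vulns', 5.0)]
```

Reporting only changed values keeps the downstream comparison against secSLA targets cheap when most readings are stable; an event-driven design would be an equally plausible alternative.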

The workflow starts when the customer requests the monitoring and validation of a set of SLOs. Upon receipt of the request, the session manager retrieves the SLO targets from the secSLA, loads the measurement instances of the requested SLOs from the SLO dictionary, and starts a session with the CSP to collect the data necessary for the SLO measurements through the four engines. Afterwards, the measured SLO values are compared with the targets in the secSLA to detect violations. Finally, whenever an SLO violation is identified, the customer is notified.
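The workflow described above can be condensed into a single monitoring round. In this minimal Python sketch, the secSLA, the SLO dictionary and the notification channel are passed in as plain dictionaries and callables; `run_session`, `Target` and the SLO names are hypothetical, and the data-collection engines are replaced by stub measurement functions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Target:
    value: float
    at_least: bool  # True: measured must be >= value; False: must be <= value


def run_session(
    requested: List[str],
    secsla: Dict[str, Target],
    dictionary: Dict[str, Callable[[], float]],
    notify: Callable[[str, float, Target], None],
) -> Dict[str, float]:
    """One monitoring round for the SLOs a customer asked for (hypothetical API)."""
    # 1. Retrieve the contracted targets for the requested SLOs from the secSLA.
    targets = {name: secsla[name] for name in requested}
    # 2. Load the measurement instances from the SLO dictionary.
    measures = {name: dictionary[name] for name in requested}
    # 3. Collect the data and compute the current SLO values (stubbed here).
    measured = {name: measure() for name, measure in measures.items()}
    # 4. Compare measured values with the targets and notify on each violation.
    for name, value in measured.items():
        t = targets[name]
        ok = value >= t.value if t.at_least else value <= t.value
        if not ok:
            notify(name, value, t)
    return measured


# Usage with stub measurements (hypothetical SLO names and values).
alerts: List[str] = []
sla = {"patched_hosts_pct": Target(95.0, True), "incident_detect_h": Target(24.0, False)}
stubs = {"patched_hosts_pct": lambda: 90.0, "incident_detect_h": lambda: 4.0}
run_session(list(sla), sla, stubs, lambda name, value, target: alerts.append(name))
print(alerts)  # ['patched_hosts_pct']
```

Repeating such a round for the duration of the service would correspond to the continuous monitoring the framework is designed for.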

References

- [1] S. Alboghdady, S. Winter, A. Taha, H. Zhang, and N. Suri, "C'mon: Monitoring the Compliance of Cloud Services to Contracted Properties," in Proceedings of the 12th International Conference on Availability, Reliability and Security (ARES '17), Association for Computing Machinery, New York, NY, USA, 2017, Article 36, pp. 1–6, doi: 10.1145/3098954.3098967.

- [2] J. Luna, A. Taha, R. Trapero, and N. Suri, "Quantitative Reasoning about Cloud Security Using Service Level Agreements," IEEE Transactions on Cloud Computing, vol. 5, no. 3, pp. 457–471, July–Sept. 2017, doi: 10.1109/TCC.2015.2469659.

- [3] G. Coker, J. Guttman, P. Loscocco, A. Herzog, J. Millen, B. O'Hanlon, J. Ramsdell, A. Segall, J. Sheehy, and B. Sniffen, "Principles of remote attestation," International Journal of Information Security, vol. 10, no. 2, pp. 63–81, June 2011, doi: 10.1007/s10207-011-0124-7.

- [4] "Performance Measurement Guide for Information Security," NIST Special Publication 800-55 Revision 1, National Institute of Standards and Technology, 2008.

- [5] "The CIS Security Metrics v1.1.0," Tech. Rep., The Center for Internet Security, 2010.

- [6] "D2.1 Security-Aware SLA Specification Language and Cloud Security Dependency Model," Tech. Rep., Certification Infrastructure for Multi-Layer Cloud Services (CUMULUS), Sept. 2013.

- [7] D. Nunez and C. Fernandez-Gago, "D:C-5.2 Validation of the Accountability Metrics," Tech. Rep., Accountability for Cloud and Other Future Internet Services (A4Cloud), Oct. 2014.

- [8] "Report on Conceptual Framework for Cloud SLA Negotiation – Final," Tech. Rep. Deliverable 2.1.2, Secure Provisioning of Cloud Services based on SLA Management (SPECS), Oct. 2014.

- [9] OpenStack, https://www.openstack.org/

(By Yiqun Chen, Lancaster University)